
This week we spent a day in New York City reviewing Amazon Web Services Inc.’s artificial intelligence strategy and progress with several AWS execs, including Matt Wood, vice president of AI at the company. We came away with a better understanding of AWS’ AI approach beyond what was laid out at re:Invent 2023.

We also met separately with a senior technology leader at a large financial institution to gauge customer alignment with AWS’ narrative. Although stories from both camps left us with a positive impression, the survey data shows OpenAI and Microsoft Corp. continue to hold the AI momentum lead, a position the pair usurped from AWS, which historically was first to market with cloud innovations. AWS’ strategy to take back the lead involves a multipronged approach within its three-layer stack of infrastructure, AI tooling and up-the-stack applications.

In this Breaking Analysis, we review the takeaways from our AI field trip to New York City. We’ll share survey data from Enterprise Technology Research on generative AI adoption and key barriers. We also place these in the context of the recent scathing review of Microsoft’s security practices by the federal government’s Cyber Safety Review Board, and we’ll share our view of AWS’ AI opportunities and challenges going forward.

Power Law of Gen AI plays out

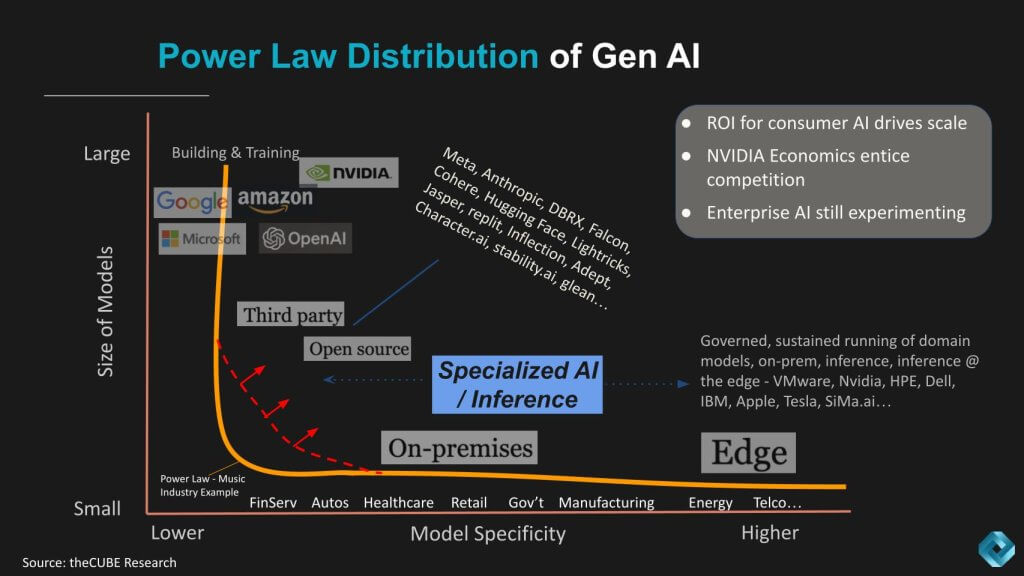

Early last year, theCUBE Research published the Power Law of Gen AI shown here. The basic concept is that while some industries have a handful of dominant leaders and a long tail of bit players, we see the gen AI curve differently. On the chart we show model size on the vertical axis and domain specificity on the horizontal axis. And though a few giants such as the hyperscalers will dominate the training space, numerous use cases are emerging and will continue to do so with greater industry specialization.

Furthermore, open-source models and well-funded third parties will pull the torso up and to the right, as shown by the red line. And they will support the premise of domain-specific models by helping customers balance model size, complexity, cost and best fit.

AWS’ generative AI stack

We weren’t able to obtain the deck Matt Wood shared with us, so we’ll revert to an annotated version (by us) of a slide AWS Chief Executive Adam Selipsky showed at re:Invent last year.

The diagram depicts AWS’ three-layered gen AI stack, comprising core infrastructure for training foundation models and doing cost-effective inference. Building on top of that layer is Bedrock, a managed service providing access to tools that leverage large language models, and at the top of the stack there’s Q, Amazon’s effort to simplify the adoption of gen AI with what is essentially Amazon’s version of copilots.

Infrastructure at the core

Let’s talk about some of the key takeaways from each layer of the stack. First, at the bottom layer, there are three main areas of focus: 1) AWS’ history in machine learning and AI, particularly with SageMaker; 2) its custom silicon expertise; and 3) compute optionality with roughly 400 instances. We’ll take these in order.

Amazon emphasized that it has been doing AI for a long time with SageMaker. SageMaker, while widely adopted and powerful, is also complex. Getting the most out of SageMaker requires an understanding of complex ML workflows, choosing the right compute instance, integration into pipelines and information technology processes, and other nontrivial operations. A large proportion of AI use cases can be addressed by SageMaker. AWS in our view has an opportunity to simplify the process of using SageMaker by applying gen AI as an orchestration layer to widen the adoption of its traditional ML tools.

In silicon, AWS has a long history developing custom chips with Graviton, Trainium and Inferentia. AWS offers so many EC2 options that it can be confusing, but these options allow customers to optimize instances for workload best fit. AWS of course offers graphics processing units from Nvidia Corp. and claims it was the first to ship H100s and that it will be the first to market with Blackwell, Nvidia’s superchip.

AWS’ strategy at the core infrastructure layer is supported by key building blocks such as Nitro and Elastic Fabric Adapter to support a wide range of XPU options with security designed in from the start.

Bedrock and foundation model optionality

Moving up the stack to the second block, this is where much of the attention is placed because it’s the layer that competes with OpenAI. Much of the industry was unprepared for the ChatGPT moment. AWS was no exception in our view. Although it had Titan, its internal foundation model, it made the decision that offering multiple models was a better approach.

A skeptical view might be that this is a case of “if you can’t fix it, feature it,” but AWS’ history is to both partner and compete. Snowflake versus Redshift is a classic example where AWS serves customers and profits from the adoption of both.

Amazon Bedrock is the managed service platform by which customers access multiple foundation models and tools to ensure trusted AI. We’ve superimposed on Adam’s chart several foundation models that AWS offers, including AI21 Labs Ltd.’s Jurassic, Amazon’s own Titan model and Anthropic PBC’s Claude, perhaps the most important of the group given AWS’ $4 billion investment in the company. We also added Cohere Inc., Meta Platforms Inc.’s Llama, Mistral AI with several options including its “mixture of experts” model and its Mistral Large flagship, and finally Stability AI Ltd.’s Stable Diffusion model.

And we would expect to see more models in the future, including possibly Databricks Inc.’s DBRX. As well, Amazon will be evolving its own foundation models. Last November, you may recall, a story broke about Amazon’s Olympus, which is reportedly a 2 trillion-parameter model headed up by the former leader of Amazon Alexa, reporting directly to Amazon.com Inc. CEO Andy Jassy.

Simplifying gen AI adoption with applications

Finally, the top layer is Q, an up-the-stack application layer designed to be the easy button, with out-of-the-box gen AI for specific use cases. Examples today include Q for supply chain or Q for data, with connectors to popular platforms such as Slack and ServiceNow. Essentially, think of Q as a set of gen AI assistants that AWS is building for customers that don’t want to build their own. AWS doesn’t use the term “copilots” in its marketing as that is a term Microsoft has popularized, but basically that’s how we look at Q.

Large gen AI adoption – Microsoft and OpenAI dominant

The chart below shows data from the very latest ETR technology spending intentions survey of more than 1,800 accounts. We got permission from ETR to publish this ahead of its webinar for private clients. The vertical axis is spending momentum, or Net Score, on a platform. The horizontal axis is presence in the data set, measured by the overlap within those 1,800-plus accounts. The red line at 40% indicates a highly elevated spend velocity. The table insert in the bottom right shows how the dots are plotted: Net Score by N in the survey.

Point 1: OpenAI and Microsoft are off the charts in terms of account penetration. OpenAI has the No. 1 Net Score at nearly 80% and Microsoft leads with 611 responses.

Point 2: AWS is primarily represented in the survey by SageMaker. AWS and Google within the AI sector are much closer than they are in the overall cloud segment. AWS is far ahead of Google when we show cloud account data, but Google appears to be closing the gap. Data on Bedrock is not currently available in the ETR data set. Both AWS and Google have strong Net Scores and a very solid presence in the data set, but the compression between those two names is notable.

Point 3: Look at the moves both Anthropic and Databricks are making in the ML/AI survey. Anthropic in particular has a Net Score rivaling that of OpenAI, albeit with a much, much smaller N. But that’s AWS’ most important LLM partner. Databricks as well is moving up and to the right. Our understanding is that ETR will be adding Snowflake in this sector. Snowflake, you may recall, essentially containerizes Nvidia’s AI stack as one of its main plays in AI, so it will be interesting to see how it fares in the days ahead.

Point 4: In the January survey, Meta’s Llama was ahead of both Anthropic and Databricks on the vertical axis, and it’s interesting to note the degree to which they’ve swapped positions. We’ll see if that trend line continues.

AI tool diversity enables the best strategic fit

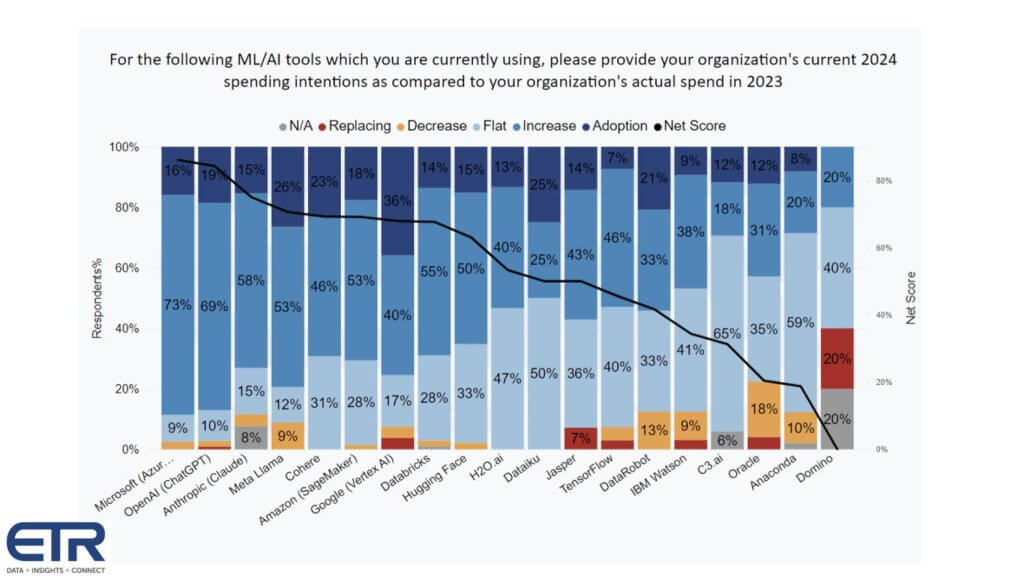

We received the chart below after we recorded our video but wanted to share this data because it provides a more detailed and granular view than the previous chart. It breaks down the Net Score methodology. Remember, Net Score is a measure of spending velocity on a platform. It measures the percentage of customers in a survey that are: 1) adopting a platform as new; 2) increasing spend by 6% or more; 3) spending flat at +/- 5%; 4) decreasing spend by 6% or worse; and 5) churning. Net Score is calculated by subtracting 4+5 from 1+2, and it reflects the net percentage of customers spending more on a platform.
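As a rough illustration of that arithmetic, here is a minimal Python sketch. The function name, the example shares and the `HIGHLY_ELEVATED` constant are our own illustrative assumptions, not ETR code or ETR survey figures; only the formula and the 40% threshold come from the description of the methodology.

```python
# Illustrative only: the shares used below are made up, not ETR data.
HIGHLY_ELEVATED = 40  # Net Score at or above this is considered highly elevated


def net_score(adopting, increasing, flat, decreasing, churning):
    """Return Net Score from the five response shares, each given in percent."""
    total = adopting + increasing + flat + decreasing + churning
    if abs(total - 100) > 1e-6:
        raise ValueError("the five shares must sum to 100")
    # (1 + 2) minus (4 + 5); the flat share (3) does not enter the score
    return (adopting + increasing) - (decreasing + churning)


# Hypothetical platform: 20% new, 45% spending more, 30% flat, 4% less, 1% churn
score = net_score(adopting=20, increasing=45, flat=30, decreasing=4, churning=1)
print(score, score >= HIGHLY_ELEVATED)
```

Note that the flat share only affects the result indirectly: a platform with many flat spenders has less room for either positive or negative responses, which pulls its Net Score toward zero.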

Below we show the data for each of the ML/AI tools in the ETR survey.

The following points are noteworthy:

- A Net Score of 40% or better is considered highly elevated.

- The dominant scores of Microsoft and OpenAI are notable given the large Ns we showed in the previous chart.

- Anthropic’s momentum is also impressive, but its presence in the survey (Ns) is one-sixth that of OpenAI.

- We don’t currently have data for Amazon Bedrock, but it’s likely much of the Anthropic adoption is through AWS.

- The top tools show virtually no churn, with the tiny exception of OpenAI and Google Vertex.

- The same is true for spending decreases, except for Llama, which is showing a small portion of customers spending less.

- The top nine tools all show the percentage of customers spending more is greater than those spending flat and spending less combined, a sign of an immature market with a lot of momentum.

There is much discussion in the industry regarding the eventual commoditization of LLMs. We’re still formulating our opinion on this and gathering data, but anecdotal discussions with customers suggest they see value in optionality and diversity, and our view is that as long as innovation and “leapfrogging” continue, foundation models may consolidate but commoditization is of lower probability.

Gen AI adoption is rapid but risks remain

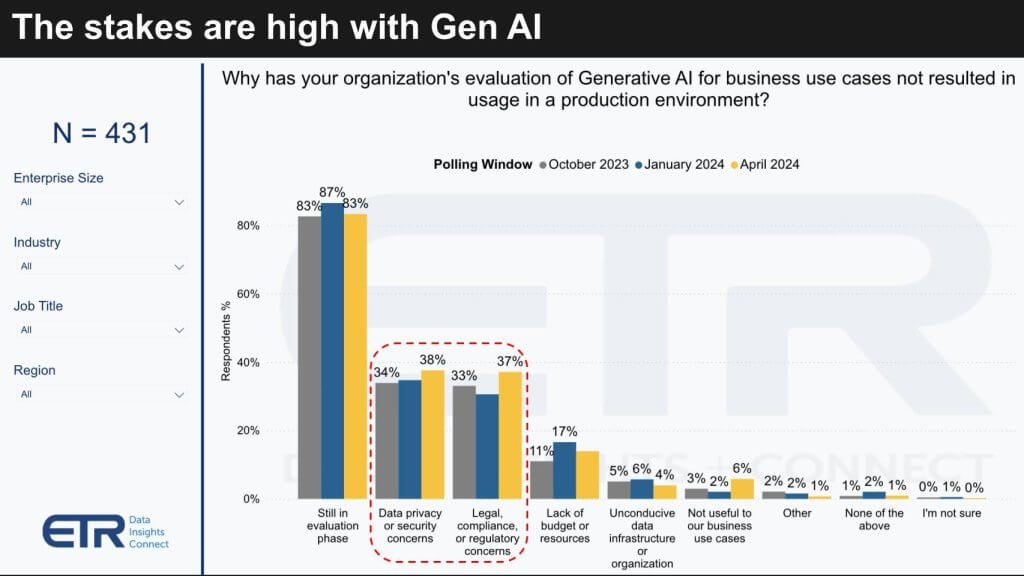

Let’s look at some other ETR data and dig into some of the challenges associated with bringing gen AI into production. In a March survey of almost 1,400 IT decision makers, nearly 70% said their companies have put some form of gen AI into production. The chart below shows the 431 that have not gone into production and asks them why.

The No. 1 reason is that they’re still evaluating, but the real tell is the degree to which data privacy, security, legal, regulatory and compliance concerns are barriers to adoption. This is no surprise, but unlike the days of big data, when many deployments went unchecked, most organizations today are being far more mindful with AI. Still, we believe customers have blind spots and are taking on risks that are not fully understood.

Microsoft’s security posture is a major customer risk

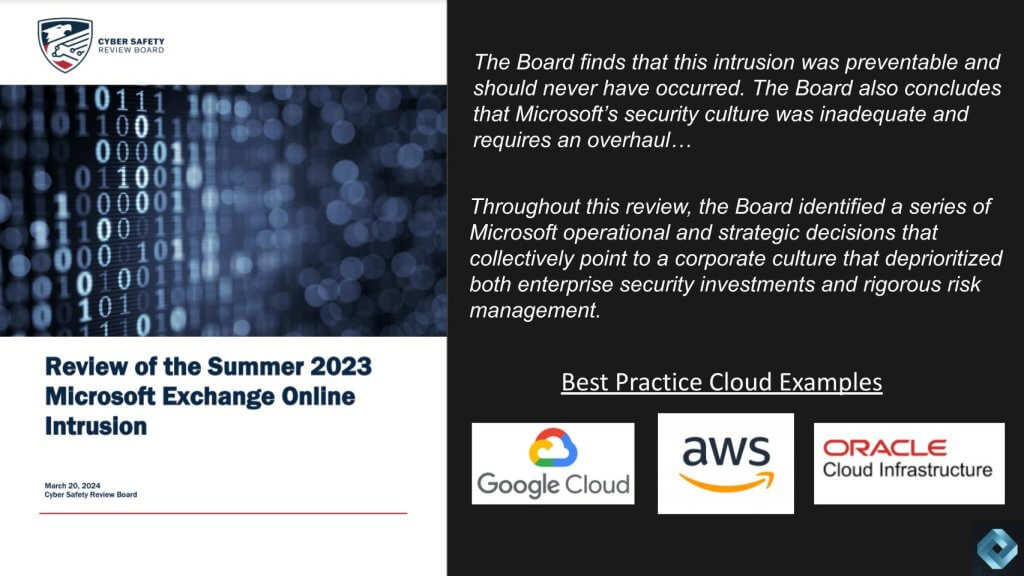

Given the concerns about privacy and security, one can’t help but reflect on the recent report initiated by the head of Homeland Security to investigate the hack on Microsoft one year ago that was traced to China. The breach compromised the accounts of key government officials, including the commerce secretary.

The government’s report absolutely eviscerated Microsoft for prioritizing feature development over security, using outdated security practices, failing to close known gaps and poorly communicating what happened, why it happened and how it will be addressed. This story was widely reported, but it’s worth noting in the context of AI adoption. Here are a few key callouts from that report:

The Board finds that this intrusion was preventable and should never have occurred. The Board also concludes that Microsoft’s security culture was inadequate and requires an overhaul…

Throughout this review, the Board identified a series of Microsoft operational and strategic decisions that collectively point to a corporate culture that deprioritized both enterprise security investments and rigorous risk management.

The report also evaluated other cloud service providers and specifically called out Google, AWS and Oracle Corp. The report gave specific best-practice examples of how they approach security and left the reader believing that these companies have far better security in place than does Microsoft.

Why is this so relevant in the context of gen AI? It’s because the cloud has become the first line of defense in cybersecurity. In cloud there is a shared responsibility model that most customers understand, and it appears that Microsoft is not living up to its end of the bargain.

If you’re a CEO, chief information officer, chief information security officer, board member or profit-and-loss manager, and you’re a Microsoft shop, you’re relying on Microsoft to do its job. According to this report, Microsoft is failing you and putting your business at risk. This is especially concerning because of the ubiquity of Microsoft and its presence in virtually every market, and the astounding AI adoption data we shared above. Customers must begin to ask themselves if the convenience of doing business with Microsoft is exposing risks that need to be mitigated.

CEO Satya Nadella saved Microsoft from irrelevance when he took over from Steve Ballmer and initiated a cloud call to action. Based on this detailed report, Microsoft has violated the trust of its customers, many of which are now putting their AI strategies in Microsoft’s hands. This is a wake-up call to enterprise technology executives and, if ignored, it could spell disaster for large swaths of customers.

Learnings from the AWS AI briefing

Coming back to AWS. As you can see in the data, AWS is doing well, but if you believe AI is the new next thing, which we do, then: 1) the game has changed and 2) AWS has a lot of work to do.

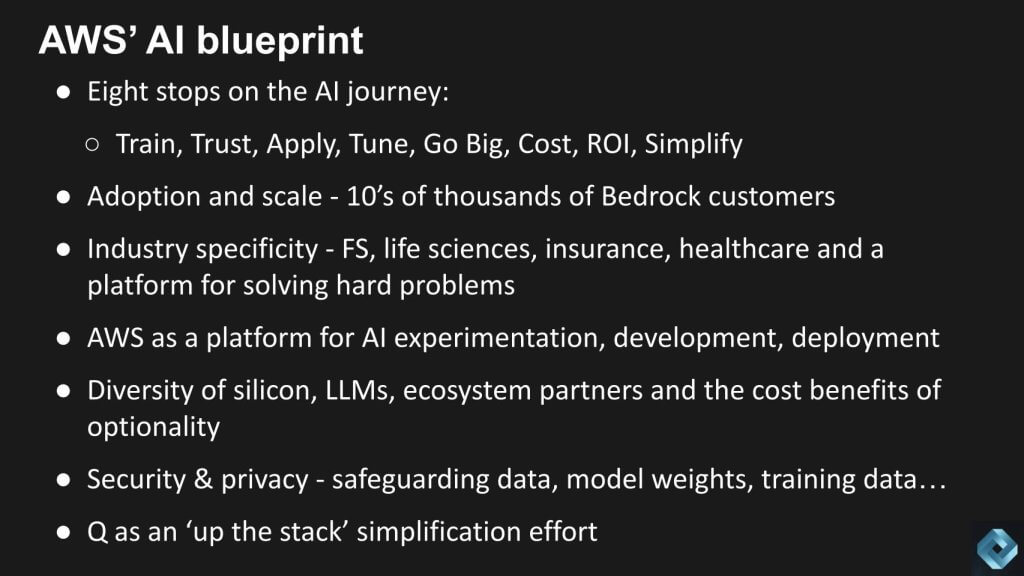

So what are some of the themes we heard this week from AWS?

Matt Wood laid out an eight-step journey AWS sees in customer AI initiatives. These are not necessarily linear steps, but they are key milestones and objectives that customers are pursuing.

Step 1: training. Let’s not spend too much time here because most customers are not doing hard-core training. Rather, they start with a pretrained model from the likes of Anthropic or Mistral. AWS did make the claim that most leading foundation models (other than OpenAI’s) are predominantly trained on AWS. Anthropic is an obvious example, but Adobe Firefly was another one that caught our attention based on last week’s Breaking Analysis.

Step 2: IP retention and confidentiality. Perhaps the most important starting point. Despite that ETR data we just showed you, many organizations have banned the use of OpenAI tools internally. But we know for a fact that developers, for example, find OpenAI tooling to be better for many use cases such as code assistance. We know devs whose company has banned the use of ChatGPT for coding, but rather than use CodeWhisperer (for example), they find OpenAI tooling so much better that they download the iPhone app and do it on their smartphone. This should be a concern for CISOs. Customers should be asking their AI provider if humans are reviewing results. What type of encryption is used? How is security built into managed services? How is training data protected? Can data be exfiltrated and if so how? How is access to data flows being fenced off from the outside world and even the cloud provider?

Step 3: applying AI. The goal here is to widely apply gen AI across the entire business to drive productivity and efficiency. The reality is that customer use cases are piling up. The ETR survey data tells us that 40% of customers are funding AI by stealing from other budgets. The backlog is growing and there’s a lot of experimentation going on. Historically, AWS has been a great place to experiment, but from the data, OpenAI and Microsoft are getting a lot of that business today. AWS’ contention is that other cloud providers are married to a limited number of models. We’re not convinced. Clearly Google wants to use its own models. Microsoft prioritizes OpenAI, of course, but it has added other models to its portfolio. This is one where only time will tell. In other words, does AWS have a sustainable advantage over other players with foundation model optionality or, if it becomes an important criterion, can others expand their partnerships further and neutralize any AWS advantage?

Step 4: consistency and fine-tuning. Getting to consistent and fine-tuned retrieval-augmented generation models, for example. Matt Wood talked about the “Swiss cheese effect” that AWS is addressing. It’s a case where if a RAG system has the data, it’s pretty good, but where it doesn’t, it’s like a hole in Swiss cheese, so the models will hallucinate. Filling those holes or avoiding them is something that AWS has worked on, according to the company, and it says it is able to minimize poor-quality outputs.
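To make the Swiss cheese idea concrete, here is a toy Python sketch of one way to avoid the holes: decline to answer when retrieval finds nothing relevant. This is not a description of how AWS or Bedrock handles the problem. The word-overlap scoring, the `min_overlap` threshold and the function names are hypothetical stand-ins for a real retriever and model call.

```python
def retrieve(question, documents, min_overlap=2):
    """Return the best-matching document, or None if nothing overlaps enough."""
    q_words = set(question.lower().split())
    best_doc, best_score = None, 0
    for doc in documents:
        # Toy relevance score: number of words shared with the question
        score = len(q_words & set(doc.lower().split()))
        if score > best_score:
            best_doc, best_score = doc, score
    # Below the threshold we treat the question as falling into a "hole"
    return best_doc if best_score >= min_overlap else None


def answer(question, documents):
    """Ground the reply in retrieved text, or abstain instead of guessing."""
    context = retrieve(question, documents)
    if context is None:
        return "I don't have data to answer that."
    # A real system would pass `context` to a language model here
    return f"Based on: {context}"


docs = [
    "net score measures spending velocity",
    "bedrock offers multiple foundation models",
]
print(answer("what does net score measure", docs))
print(answer("who won the game", docs))
```

The design choice worth noting is the explicit abstain path: a missing match produces a refusal rather than an ungrounded generation, which trades some coverage for fewer poor-quality outputs.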

Step 5: solving complex problems. For example, getting deeper into industry problems in health care, financial services, drug discovery and the like. Again, these are not linear customer journeys; rather, they are examples of initiatives AWS is helping customers address. Most customers today are not in a position to attack these hard problems, but industry leaders with deep pockets are able to do so, and AWS wants to be their go-to partner.

Step 6: lower, predictable costs. AWS didn’t call this cost optimization, but that’s what this is. It’s an area where AWS touts its custom silicon. While competitors are now designing their own chips, as we’ve reported for years, AWS has a huge head start in this regard from its Annapurna acquisition of 2015.

Step 7: common successful use cases. The data tells us today that the most common use cases are document summarization, image creation, code assistance and basically the things we’re all doing with ChatGPT. This is relatively straightforward and, if done securely, it can yield fast return on investment.

Step 8: simplification. Making AI easier for those who don’t have the resources or time to do it themselves. Amazon’s Q is designed to attack this initiative with out-of-the-box gen AI use cases, as we described earlier. We don’t have firm data on Q adoption at this point but are working on getting it.

Some other quick takeaways from the conversations:

- We met with industry experts at AWS in financial services and cross-industry execs who shared numerous use cases in insurance, finance, media, health care, you name it, right in line with the power law we discussed earlier.

- AWS is positioning itself as a platform to help scale and it has a strong track record in doing so.

- Bedrock adoption is very strong with tens of thousands of customers.

- And the last three on the chart above we’ve touched on a bit: silicon and LLM diversity, plus ecosystem partners and companies such as Adobe training on AWS with products such as Firefly, which we covered last week.

- Security, privacy and controls.

- Up-the-stack applications with Q. Our belief is Q is still a work in progress. Packaged apps are not AWS’ wheelhouse, but Q is a start and perhaps gen AI makes it easier for it to enter upstream.

AWS AI going forward

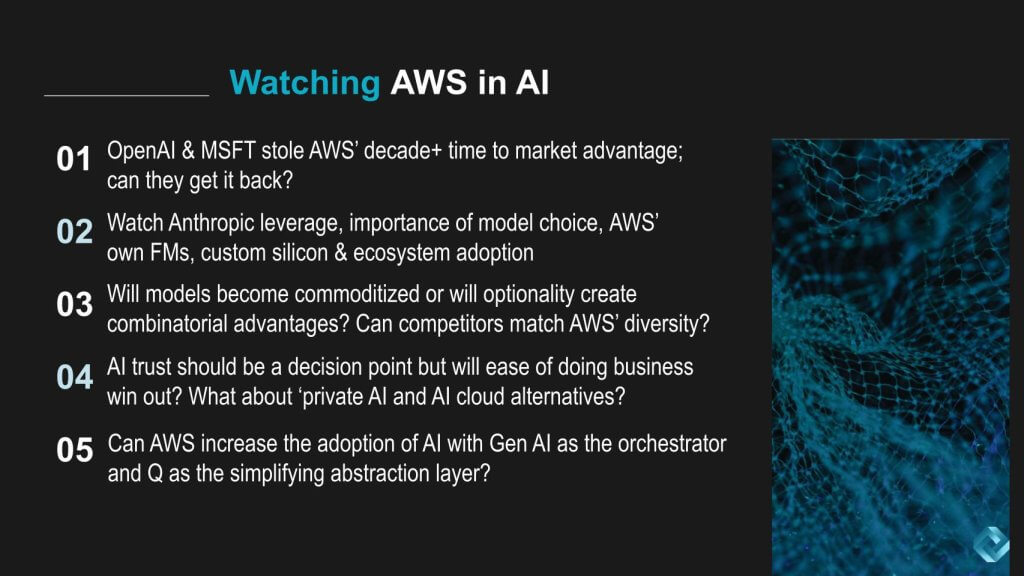

OpenAI and Microsoft stole AWS’ decade-plus time-to-market advantage; can it get it back? To do so, it will try to replicate its internal innovation with ecosystem partners to offer customer choice and sell tools and infrastructure around them.

Watch for Anthropic leverage, even closing the loop back to silicon. In other words, can the relationship with Anthropic make AWS’ custom chips better? And what about Olympus? Watch for that capability from Amazon’s internal efforts this year. How will model choice play into AWS’ advantage and will it be sustainable?

Will models become commoditized or will optionality create combinatorial advantages? Can competitors match AWS’ diversity if optionality becomes an advantage?

AI trust should be a critical decision point, but will ease of doing business win out? What about private AI and AI cloud alternatives?

Speaking of GPU cloud alternatives, our friends at Vast Data Inc. are doing very well in this space. At Nvidia GTC, we attended a lunch hosted by Vast with Genesis Cloud that was very informative. These companies are really taking off and positioning themselves as purpose-built AI clouds to compete with the likes of AWS.

So we asked Vast for a list of the top alternative clouds it’s working with in addition to Genesis. Names such as Core42, CoreWeave, Lambda and Nebul are raising tons of money and gaining traction. Perhaps not all of them will make it, but some will challenge the hyperscale leaders. How will that impact supply, demand and adoption dynamics?

Can AWS improve the adoption of AI with gen AI as the orchestrator and Q as the simplifying abstraction layer? In other words, can gen AI accelerate AWS’ entry into the application business, or will its strategy continue to be enabling its customers to compete up the stack? Chances are the answer is “Both.”

What do you think? Does AWS’ strategy resonate with you? Are you concerned about Microsoft’s security posture? Will it make you rethink your IT bets? How important is model diversity to your business? Does it complicate things or allow you to optimize for the variety of use cases you have in your backlog?

Let us know.
